Scale-Out File Server (SOFS) is a feature designed to provide scale-out file shares that are continuously available for file-based server application storage such as Hyper-V. Scale-out file shares make it possible to share the same folder from multiple nodes of the same cluster.

In this blog we will go deeper into DNS settings for Scale-Out File Servers. I assume you have already played around with SOFS and know the basics.

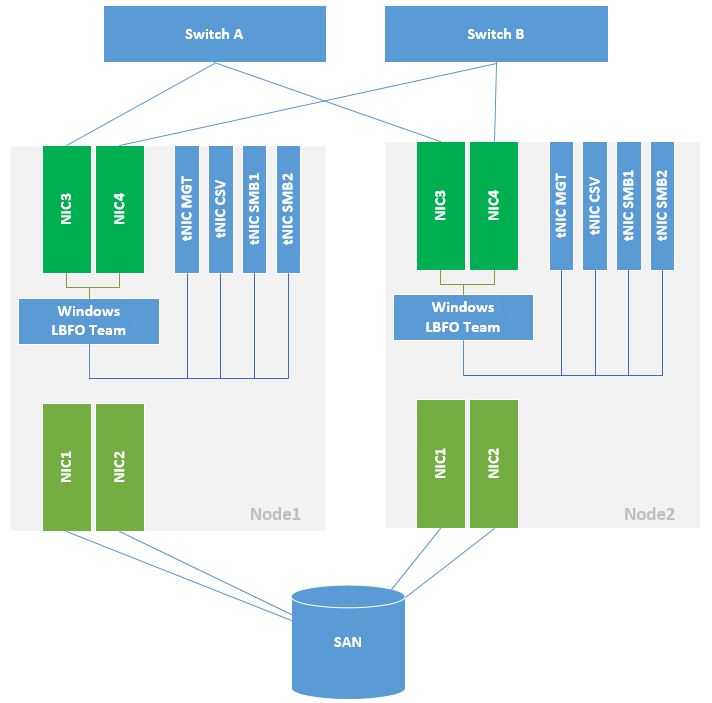

In this example I’m using a 2-node Windows Server 2012 R2 cluster with 4x 10GbE adapters.

NIC1 and NIC2 are dedicated to iSCSI traffic for the shared storage that presents LUNs to the SOFS cluster. These LUNs are then added as CSVs, and this is where the SMB3 shares live.

NIC3 and NIC4 are converged using native NIC teaming with Team NICs for Management, CSV, and SMB Traffic.

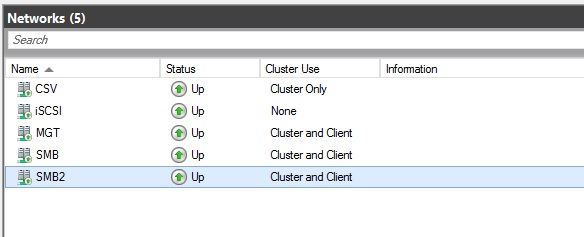

As shown below I have 3 cluster networks enabled for “Cluster and Clients”.

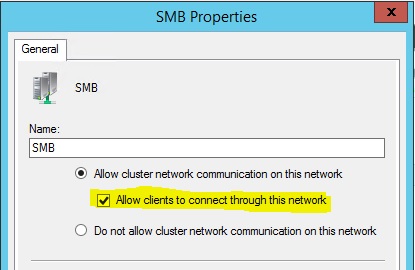
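The same information can be read with PowerShell on one of the cluster nodes. This is a minimal sketch, assuming the FailoverClusters module is available. A Role value of 3 corresponds to "Cluster and Clients", 1 to cluster-only and 0 to a network the cluster does not use.

```powershell
# List every cluster network with its role and subnet.
# Role 3 = cluster and client, 1 = cluster only, 0 = not used by the cluster.
Get-ClusterNetwork | Format-Table Name, Role, Address, AddressMask -AutoSize
```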

When creating your Client Access Point (CAP) for the SOFS, the cluster service will register all the network interface IPs, that have the “Allow clients to connect through this network” setting enabled, in DNS for the CAP.

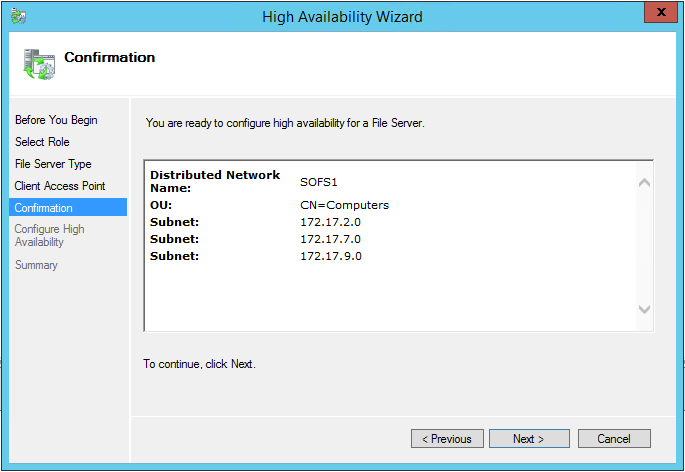

These networks are automatically detected in the Wizard:

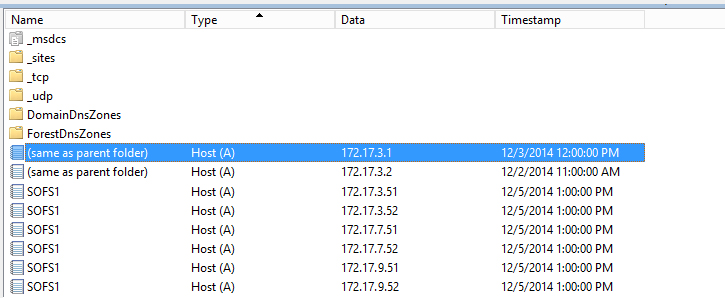

All clients that resolve the SOFS name, in my case “SOFS1”, get one of the SOFS IP addresses from DNS in round-robin fashion.

This is fine for your (Hyper-V) clients in the SMB networks, but for clients that are only in the management network it causes connectivity errors.

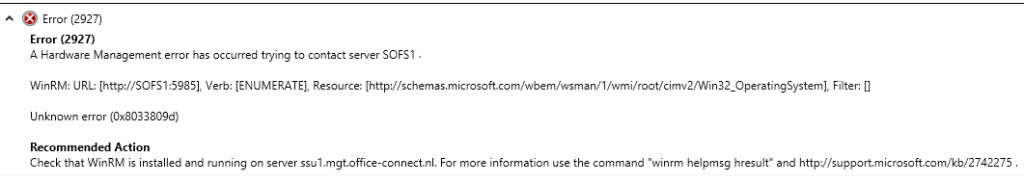

Take Virtual Machine Manager for example: on every refresh it looks up the DNS record and tries to connect, and if the connection fails it shows error messages.

This is because your clients in the management network cannot communicate with the SOFS through the SMB networks, although they can receive an SMB IP address from the DNS server.

Since I have 2 cluster nodes and 3 client-enabled networks, by default 6 records in total are added to the DNS zone for the CAP.
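If you want to see which addresses a client actually receives, you can query DNS from that client. A small sketch, using the “SOFS1” name from this example; the client may answer from its local DNS cache, so clear it first if you just changed something.

```powershell
# Flush the local resolver cache so the answer comes from the DNS server
Clear-DnsClientCache

# Show every A record registered for the SOFS client access point
Resolve-DnsName -Name "SOFS1" -Type A | Format-Table Name, IPAddress -AutoSize
```

With the default settings described above, you would expect one address per node for each client-enabled network.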

To overcome these problems there are a few options:

Option 1: Disabling DNS registration on SMB NICs

I always disable DNS registration on the SMB NICs, so that the SOFS name is only registered in DNS with a management network IP.

When you uncheck the “Register this connection's addresses in DNS” setting on your SMB NIC, the cluster service will not register the IP in DNS, and your management clients will not connect to an SMB network IP.

Your clients will connect to the SOFS through the management network. When SMB traffic is initiated, SMB Multichannel will kick in and find the routes to the SOFS over the SMB networks.

Of course our dear friend PowerShell can help you with disabling the register setting on your NICs:

Get-NetAdapter "SMB" | Set-DnsClient -RegisterThisConnectionsAddress:$false

You could get the following error message in the Failover Cluster Manager events:

Event ID 1196:

Cluster network name resource ‘Cluster Name’ failed registration of one or more associated DNS name(s) for the following reason:

This operation returned because the timeout period expired.

This is probably because you forgot to disable DNS registration on IPv6 as well.

When you use the PowerShell command, it is disabled for both IPv4 and IPv6.
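To check the result, you can read the setting back per adapter. This is a sketch that assumes your SMB adapters have “SMB” somewhere in their name, as in this example; adjust the filter to your own naming.

```powershell
# Show the DNS registration setting for every adapter with "SMB" in its alias.
# RegisterThisConnectionsAddress should be False on the SMB NICs.
Get-DnsClient |
    Where-Object InterfaceAlias -like "*SMB*" |
    Format-Table InterfaceAlias, RegisterThisConnectionsAddress -AutoSize
```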

Note: If you have identical NICs (same speed) you probably want to configure SMB Multichannel Constraints.

Option 2: ExcludeNetworks parameter

This is probably not the best method for you, because it creates the opposite of the situation described above: the management network can no longer reach the SOFS. I still wanted to mention it.

If we take a closer look at the SOFS cluster resource with PowerShell, two interesting parameters catch the eye: InUseNetworks and ExcludeNetworks.

The InUseNetworks parameter contains the GUIDs of the cluster networks that are used for the SOFS resource.

The ExcludeNetworks parameter is empty by default and, as the name of the property reveals, lets you exclude networks from being used by the resource.
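Both parameters can be read directly from the resource. A minimal sketch, assuming the resource is called “SOFS1” as in this example; the second command is only there to translate the GUIDs back to network names.

```powershell
# Show only the two network-related parameters of the SOFS resource
Get-ClusterResource "SOFS1" |
    Get-ClusterParameter -Name InUseNetworks, ExcludeNetworks |
    Format-Table Name, Value -AutoSize

# Map the GUIDs from the output above to cluster network names
Get-ClusterNetwork | Format-Table Name, Id -AutoSize
```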

The cluster will automatically update the DNS records if networks are excluded, so only IP addresses in the right subnet are registered in DNS.

If you still want to exclude the “MGT” network from being used for the SOFS cluster resource, this property can be set with PowerShell:

    # Get the cluster resource
    $SOFS = Get-ClusterResource "SOFS1"
    # Get the cluster network ID for the "MGT" network
    $NetworkID = (Get-ClusterNetwork -Name "MGT").Id
    # Set the cluster network ID in the ExcludeNetworks property
    Set-ClusterParameter -InputObject $SOFS -Name ExcludeNetworks -Value $NetworkID
    # Show the cluster resource parameters
    $SOFS | Get-ClusterParameter | ft -AutoSize

The ExcludeNetworks parameter is now filled with the GUID of the Cluster Network.

The InUseNetworks value is updated automatically: the GUID that is now present in the ExcludeNetworks parameter is removed from it.

The resource in Failover Cluster Manager no longer shows the excluded network either.

The cluster also updates the DNS records so only IP addresses in the right subnet are registered in DNS.

SMB Multichannel Constraints

SMB Multichannel Constraints restrict which networks can be used for SMB3 traffic to a certain endpoint.

These constraints are configured on the client side and have to be configured on each client, for each SOFS endpoint individually.

The SMB3 protocol prioritizes NICs to use by default based on speed and RDMA capabilities.

If you have 2x 1GbE NICs for management and 2x 10GbE for SMB, it will use the 10GbE NICs before sending traffic over the 1GbE NICs, although the 1GbE NICs are not excluded from use.

With 2x 10GbE and 2x 10GbE RDMA NICs, the RDMA NICs will be preferred.

You probably only want to set up constraints if you have identical NICs (same speed) and/or if you want to force SMB traffic over certain NICs.
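Before deciding whether you need constraints, it helps to see how the SMB client itself rates your NICs. A short sketch, run on the client (for example a Hyper-V host):

```powershell
# Show the interfaces the SMB client can use, including link speed
# and whether each one is RSS or RDMA capable
Get-SmbClientNetworkInterface
```

If the management and SMB interfaces report the same speed and capabilities, SMB has no reason to prefer one over the other, and that is the case where a constraint is worth considering.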

You can create a new SMB Multichannel Constraint using the New-SmbMultichannelConstraint cmdlet:

New-SmbMultichannelConstraint -ServerName SOFS1 -InterfaceAlias "vEthernet (SMB)", "vEthernet (SMB2)"

CAUTION: This constraint only applies to the NetBIOS name “SOFS1”.

You will need to create an additional constraint for the FQDN!
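As a sketch, the extra constraint for the FQDN could look like this. The domain “contoso.local” is a placeholder I made up for illustration, so replace it with your own DNS suffix; the interface names are the ones from this example.

```powershell
# Constraint for the FQDN of the SOFS ("contoso.local" is a hypothetical domain)
New-SmbMultichannelConstraint -ServerName "SOFS1.contoso.local" `
    -InterfaceAlias "vEthernet (SMB)", "vEthernet (SMB2)"

# List all constraints configured on this client
Get-SmbMultichannelConstraint
```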

The Get-SmbMultichannelConnection cmdlet will show whether the SMB connections are constrained to the right interfaces. You will need to generate some SMB3 traffic to kickstart SMB Multichannel.
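A quick way to do that from the client is sketched below. The share name “VMs” is hypothetical and only serves to generate some traffic; any share on the SOFS that you can reach will do.

```powershell
# Touch a share on the SOFS to generate SMB3 traffic ("VMs" is a hypothetical share name)
Get-ChildItem "\\SOFS1\VMs" | Out-Null

# Show the multichannel connections that were set up to the SOFS
Get-SmbMultichannelConnection -ServerName "SOFS1"
```

The client and server IP addresses in the output should all belong to the SMB networks you named in the constraint.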

If you have any questions or feedback, leave a comment or drop me an email.

Darryl van der Peijl

http://www.twitter.com/DarrylvdPeijl

Hello Darryl,

We have a very strange behavior in our S2D cluster concerning SMB (Multichannel) connections.

When I check the connections, I get the following result:

| ClientIpAddress | ServerIpAddress | ServerRdmaCapable | ClientRdmaCapable | Failed |
| --- | --- | --- | --- | --- |
| 10.101.0.2 | 10.101.32.4 | True | False | True |
| 10.101.0.2 | 10.101.16.5 | True | False | True |
| 10.101.0.2 | 10.101.16.6 | True | False | True |
| 10.101.0.2 | 10.101.16.3 | True | False | True |
| 10.101.0.2 | 10.101.32.3 | True | False | True |
| 10.101.0.2 | 10.101.16.4 | True | False | True |
| 10.101.32.2 | 10.101.32.5 | True | True | False |
| 10.101.16.2 | 10.101.16.7 | True | True | False |
| 10.101.16.1 | 10.101.16.8 | True | True | False |
| 10.101.32.2 | 10.101.32.5 | True | True | False |
| 10.101.16.2 | 10.101.16.7 | True | True | False |
| 10.101.16.1 | 10.101.16.8 | True | True | False |
| 10.101.16.1 | 10.101.16.3 | True | True | False |
| 10.101.32.2 | 10.101.32.3 | True | True | False |
| 10.101.16.2 | 10.101.16.4 | True | True | False |

10.101.0.2 is the management network (routable).

10.101.16.1 and 10.101.16.2 are the storage network (not routable).

10.101.32.2 is the live migration network (not routable).

Some hosts try to connect via the management network to the storage and LM networks, which cannot succeed.

Can you tell me why this happens and how I can suppress it?

SMB tries every path it can. You can limit SMB Multichannel connections using https://docs.microsoft.com/en-us/powershell/module/smbshare/new-smbmultichannelconstraint?view=win10-ps