Scale-Out File Server (SOFS) is a feature that is designed to provide scale-out file shares that are continuously available for file-based server application storage such as Hyper-V. Scale-out file shares provide the ability to share the same folder from multiple nodes of the same cluster.

In this blog post we assume you have already played around with SOFS and know the basics.

There are multiple ways to connect storage to your SOFS cluster.

The most common way today is putting your SOFS cluster in front of an iSCSI or FC SAN; the upcoming method is using Storage Spaces in combination with a “just a bunch of disks” device, also known as a JBOD. We will cover both in this blog post.

Symmetric Storage

If the storage is equally accessible from every node in a cluster, it is referred to as symmetric storage.

Each node can take ownership of the storage in case of maintenance or failures, which provides availability.

With symmetric storage, read and write operations can be performed by every node in the cluster (also referred to as “Direct I/O”); however, metadata operations must be performed by the owner node, which orchestrates these operations.

This is probably the most common way (today) to provision storage to the SOFS cluster.

If a node in the fileserver cluster has NIC/HBA hardware failures and loses connectivity to the storage, the traffic will be redirected to the other node.

The cluster will then act as if the storage is asymmetric and will use “Redirected mode” for the CSV disks, which comes in two forms:

File system redirect mode

In this mode I/O on a cluster node is redirected at the top of the CSV pseudo-file system stack over SMB to the disk. This traffic is written to the disk via the NTFS or ReFS file system stack on the coordinator node.

Block level redirect mode

Block level redirect mode activates when a hardware failure leaves the node without a connection to the storage, or when the cluster has an asymmetric configuration.

In block level redirect mode, I/O passes through the local CSVFS proxy file system stack and is written directly to Disk.sys on the coordinator node. As a result, it avoids traversing the NTFS/ReFS file system stack twice (once on the node and once on the coordinator node).

For more information on the different Redirection modes please take a look at this blog post by Subhasish Bhattacharya on the Microsoft Clustering and High Availability team here: http://blogs.msdn.com/b/clustering/archive/2013/12/05/10474312.aspx
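To see which mode a CSV is currently using on each node, a check along the following lines might help. This is a minimal sketch, assuming Windows Server 2012 R2 or later (where the Get-ClusterSharedVolumeState cmdlet is available) and that it is run on a cluster node with the FailoverClusters module installed.

```powershell
# Requires the FailoverClusters module; run on a cluster node
# (assumes Windows Server 2012 R2 or later).
Import-Module FailoverClusters

# List the I/O mode of every CSV as seen from every node.
# StateInfo reports Direct, FileSystemRedirected or BlockRedirected.
Get-ClusterSharedVolumeState |
    Sort-Object Name, Node |
    Format-Table Name, Node, StateInfo, FileSystemRedirectedIOReason, BlockRedirectedIOReason -AutoSize
```

On a healthy symmetric cluster you would expect every node to report Direct; the two reason columns indicate why a volume is in one of the redirected modes.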

Asymmetric Storage

If one or more nodes in the cluster cannot read from or write to the storage directly, it is referred to as asymmetric storage.

Although this might seem undesirable, it works perfectly fine.

Examples of asymmetric storage:

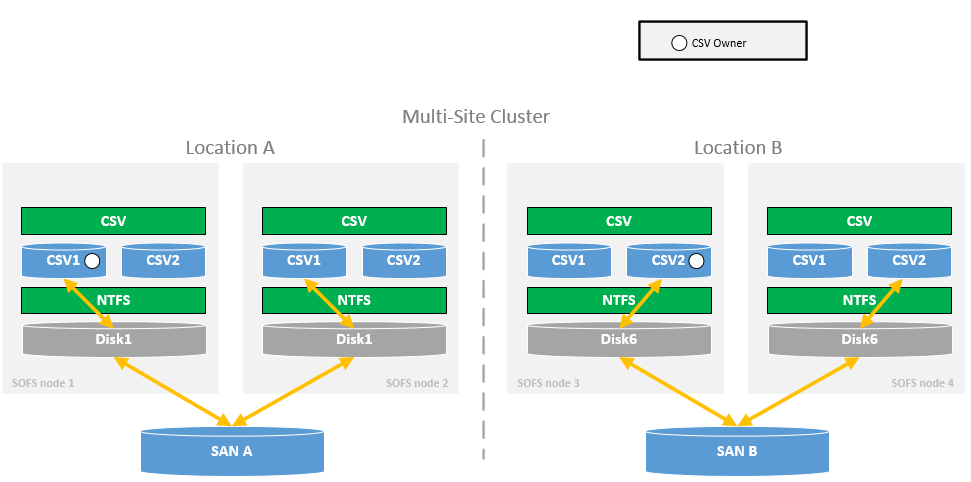

Multi-Site Cluster

You could use asymmetric storage when you create a cluster with 4 nodes spread over 2 locations.

Not all the nodes in the cluster can access all available storage in the cluster: the cluster nodes in location A are connected to a different SAN than the nodes in location B.

If a Hyper-V server connects to CSV2 and the connection lands on SOFS node 1 due to DNS round robin, the CSV will block level redirect the traffic to the owner node of CSV2, which in this case is SOFS node 3.

The above is Windows Server 2012 RTM behavior; more on the 2012 R2 behavior later.

Parity or Mirrored Storage Spaces

Another example of asymmetric storage is using Parity or Mirrored storage spaces as shared storage in a Scale-Out File Server cluster, where the CSV operates in block level redirect mode.

With Mirrored storage spaces, copies of data are stored on different disks. Parity storage spaces store parity information alongside the data, which can be used to reconstruct data from a failed disk. In both cases all reads, writes and metadata must be coordinated through the coordinator node which owns the CSV to guarantee data integrity.

Notice that on the node where the traffic is redirected to, the traffic skips the NTFS layer.

Learn more about the different types of storage spaces here.

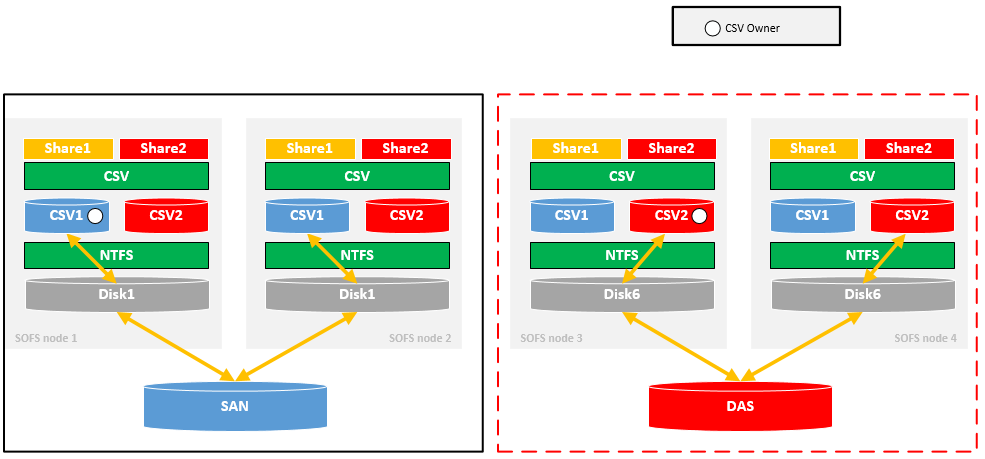
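To check whether a given storage space is Mirrored or Parity, something like the following could be used. This is a sketch that assumes the Storage module that ships with Windows Server 2012 and later, run on a node that can see the storage pool.

```powershell
# Requires the Storage module (assumes Windows Server 2012 or later).
# ResiliencySettingName reports Simple, Mirror or Parity for each virtual disk.
Get-VirtualDisk |
    Format-Table FriendlyName, ResiliencySettingName, NumberOfColumns, NumberOfDataCopies -AutoSize
```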

Scaling with Asymmetric Storage

Asymmetric storage works great for scaling out your storage because you don’t need shared storage connected to all your cluster nodes. Think about a scenario where you start with a two-node cluster and want to scale out when necessary (storage full, nodes out of bandwidth, and so on).

If we need extra capacity in the cluster we can simply add a set of nodes to the cluster (“building block”) no matter where the storage is located (SAN / Storage Spaces) or if all the nodes in the cluster have a direct connection to the storage.

I’ve illustrated this in the image below where all the RED items are part of the new Building Block.

With Windows Server 2012 R2 you can scale out your SOFS cluster to 8 nodes!

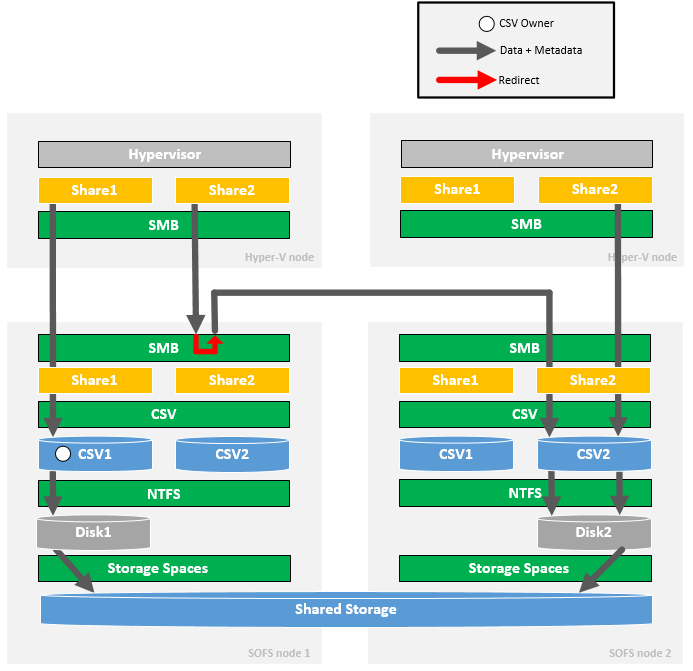

Asymmetric storage – CSV Rebalancing

Because all reads, writes and metadata must be coordinated through the coordinator node, it is important to have your CSVs equally distributed across your cluster nodes.

If the CSVs are not equally distributed, all traffic to your storage in a 2-node cluster would go through one single node, and the other node would only be redirecting traffic, which makes traffic flows inefficient. The following image shows the inefficient traffic flows.

In Windows Server 2012 R2 there is a built-in feature called “SMB Client redirection” which takes care of this inefficient behavior (see the next section).

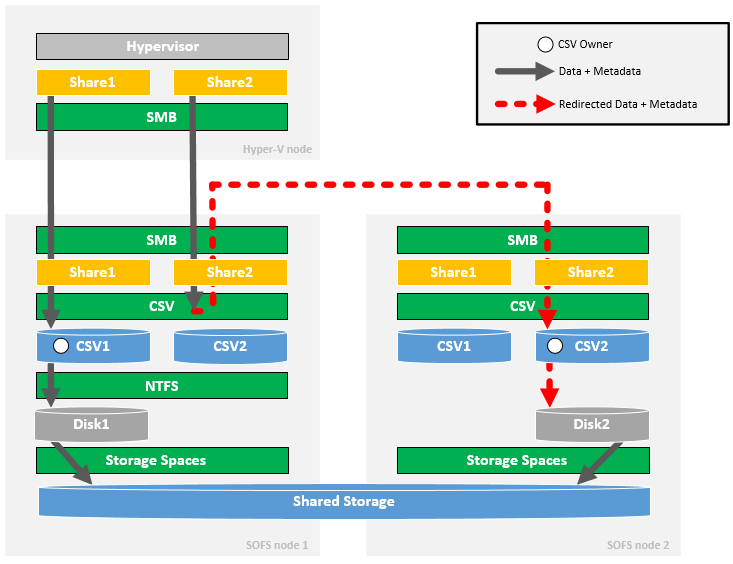
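To check how the CSVs are distributed and move one by hand, commands along these lines could be used. This is a minimal sketch using the FailoverClusters module; the disk name "Cluster Disk 2" and the node name "SOFS-Node2" are placeholders, not names from this setup.

```powershell
# Requires the FailoverClusters module; run on a cluster node.
Import-Module FailoverClusters

# Count how many CSVs each node currently owns (coordinates).
Get-ClusterSharedVolume |
    Group-Object { $_.OwnerNode.Name } |
    Format-Table Name, Count -AutoSize

# Move ownership of one CSV to another node.
# "Cluster Disk 2" and "SOFS-Node2" are hypothetical names; replace them with your own.
Move-ClusterSharedVolume -Name "Cluster Disk 2" -Node "SOFS-Node2"
```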

Asymmetric storage – SMB Client redirection

Since Windows Server 2012 R2, when a client hits a fileserver cluster node that does not own the CSV, the client is redirected by the SMB Witness service to the node that does own the CSV.

With Windows Server 2012 the client would connect to a random fileserver cluster node, and that node would redirect all data and metadata over the network to the owner node (see the previous section).

The following diagram shows the behavior in Windows Server 2012 R2.

With this client redirection the traffic flow to the storage becomes much more efficient.

Because the client is always redirected to the owner node, it is always able to do Direct I/O, and the traffic does not have to be block level redirected to another node.
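To see where clients have actually landed, the SMB Witness cmdlets can be queried on a fileserver cluster node. The sketch below assumes Windows Server 2012 or later; the client name "HV-Host1" and the node name "SOFS-Node2" are placeholders.

```powershell
# Run on a fileserver cluster node.
# Show which node each SMB client is connected to and which node acts as its witness.
Get-SmbWitnessClient |
    Format-Table ClientName, FileServerNodeName, WitnessNodeName -AutoSize

# Manually move a client to another node, for example to test the redirection.
# "HV-Host1" and "SOFS-Node2" are hypothetical names; replace them with your own.
Move-SmbWitnessClient -ClientName "HV-Host1" -DestinationNode "SOFS-Node2"
```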

Symmetric storage – SMB Client redirection

The behavior described above only relates to asymmetric storage by default.

With symmetric storage the need for client redirection to the CSV owner is negligible, because Direct I/O is possible. The client will pick one fileserver cluster node by DNS and connect to that node for all shares. Metadata operations will be redirected to the CSV owner node.

You can override this setting and make the symmetric cluster use the same redirection behavior as an asymmetric cluster by using the following PowerShell cmdlet:

Set-ItemProperty HKLM:\System\CurrentControlSet\Services\LanmanServer\Parameters -Name AsymmetryMode -Type DWord -Value 2 -Force

This setting has to be configured on every fileserver cluster node and does not impact active sessions.
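Since the value has to be set on every node, one way to apply it in one go is through PowerShell remoting. This is a sketch that assumes remoting is enabled on all nodes and that it is run from a cluster node with the FailoverClusters module; the variable names are only for illustration.

```powershell
# Requires the FailoverClusters module and PowerShell remoting to every node.
Import-Module FailoverClusters

$nodes = (Get-ClusterNode).Name
$path  = 'HKLM:\System\CurrentControlSet\Services\LanmanServer\Parameters'

Invoke-Command -ComputerName $nodes -ScriptBlock {
    # Same registry value as in the cmdlet above, applied on each node.
    Set-ItemProperty -Path $using:path -Name AsymmetryMode -Type DWord -Value 2 -Force

    # Read the value back to confirm it was written.
    Get-ItemProperty -Path $using:path -Name AsymmetryMode |
        Select-Object AsymmetryMode
}
```

The output includes a PSComputerName column, so you can confirm that each node reports the expected value.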

Shared Nothing Storage Spaces

In this blog I’ve discussed Windows Server 2012 and Windows Server 2012 R2.

With Windows Server vNext (coming in 2016) there will be a new feature introduced called “Shared Nothing Storage Spaces”.

In a future blogpost I will discuss this new feature and the way it handles client requests.

Special thanks to Anton Kolomyeytsev (Cluster MVP) and Didier van Hoye (Hyper-V MVP) for reviewing my blog!

If you have any questions or feedback, leave a comment or drop me an email.

Darryl van der Peijl

http://www.twitter.com/DarrylvdPeijl

If a node in the fileserver cluster has NIC/HBA hardware failures and loses connectivity to the storage the traffic will be redirected to the other node.

That is correct, assuming that there are network paths to the other node. This is called “Redirected Storage”.

So, which one is the best to use?

Please understand that this blog is over 4 years old. Take a look at Storage Spaces Direct at https://aka.ms/s2d