In this blog post I’m testing the new resiliency option available for two-node clusters in Windows Server 2019. This post is not meant as a performance showcase for the hardware, but to show the differences between a regular two-way mirror volume and a nested resiliency volume.

Why do I want nested resiliency?

Nested resiliency helps two-node clusters by keeping more copies of the data on each node, which adds resiliency at the cost of usable capacity. With a two-way mirror we can get into trouble when hardware fails in both fault domains at the same time; with nested resiliency our volumes stay up and running!
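To put a rough number on the capacity trade-off, here is a minimal sketch in Python. It assumes a nested two-way mirror keeps four copies of the data versus two for a regular two-way mirror, and it uses the raw capacity of the test cluster described in this post (2 nodes with 4x 1TB capacity drives each). The helper name `usable_capacity_tb` is made up for illustration, and the calculation ignores reserve capacity and metadata overhead, so treat the output as an estimate only.

```python
def usable_capacity_tb(raw_tb: float, copies: int) -> float:
    """Estimate usable capacity when every piece of data is stored `copies` times.

    Simplification: ignores reserve capacity and metadata overhead.
    """
    return raw_tb / copies


# Raw capacity of the test cluster: 2 nodes x 4 drives x 1 TB.
raw_tb = 2 * 4 * 1.0

# Assumption: two-way mirror keeps 2 copies, nested two-way mirror keeps 4.
two_way_tb = usable_capacity_tb(raw_tb, copies=2)
nested_tb = usable_capacity_tb(raw_tb, copies=4)

print(f"Raw capacity:          {raw_tb:.1f} TB")
print(f"Two-way mirror:        {two_way_tb:.1f} TB usable ({two_way_tb / raw_tb:.0%})")
print(f"Nested two-way mirror: {nested_tb:.1f} TB usable ({nested_tb / raw_tb:.0%})")
```

In other words, under these assumptions the extra resiliency costs about half of the usable space you would get from a regular two-way mirror.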

Read the official documentation here.

Diving in

In this test I’m using the following hardware:

Nodes: 2x DataOn K2N-108 Kepler nodes

Drive Bay: 8x 2.5” NVMe per node

Processor: 2x Intel® Xeon® Silver 4110 2.1GHz 8-Core CPU per node

Memory: 128GB per node

Capacity Tier: 4x Intel P4510 1TB NVMe

Networking: Dual-Port SFP+ 25GbE RDMA (Direct connected)

CSV Cache: Disabled

So we’ve got our hands on an All-NVMe cluster, yes 🙂

One way or the other, our Silver CPU here is going to be the bottleneck of this stack.

I’m not pushing the CPUs to the max as this could impact the outcome of this test.

Performance Results

I’m using VMFleet to test the performance of the cluster, with 4 threads and 30 outstanding I/Os.

Let’s review the results.

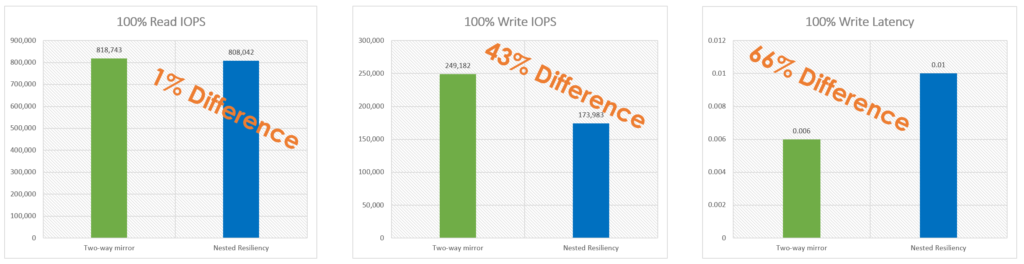

First, we start with 4 kB block size. Read IOPS are practically the same between two-way mirror and nested resiliency. This makes sense: with 2-node clusters, all reads are served locally, and no matter how many copies of data you have, you only need to read one of them. So we don’t expect a big difference in read performance. However, we do see a difference in write IOPS. This makes sense too: nested resiliency has to write extra copies on each server, which consumes more CPU cycles and more time than two-way mirror. Because we are writing four copies instead of two, latency increases as shown in the third graph. As we know, higher latency = fewer IOPS.
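The "higher latency = fewer IOPS" relationship follows from Little's Law: with a fixed number of I/Os in flight, throughput is roughly the number of outstanding I/Os divided by the average latency. The sketch below illustrates this in Python. It assumes the 30 outstanding I/Os apply per thread, giving 4 x 30 = 120 in flight per VM, and the latency values are hypothetical inputs chosen for illustration, not measurements from this test.

```python
def iops_from_latency(outstanding_io: int, latency_ms: float) -> float:
    """Little's Law: throughput = I/Os in flight / average latency per I/O."""
    return outstanding_io * 1000.0 / latency_ms


# Assumption: 4 threads with 30 outstanding I/Os each, per VM.
outstanding_io = 4 * 30

# Hypothetical latencies (not measured values from this test).
for latency_ms in (0.5, 1.0, 2.0):
    iops = iops_from_latency(outstanding_io, latency_ms)
    print(f"{latency_ms:.1f} ms average latency -> {iops:,.0f} IOPS")
```

With the queue depth held constant, doubling the latency halves the achievable IOPS, which matches the direction of the write results above.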

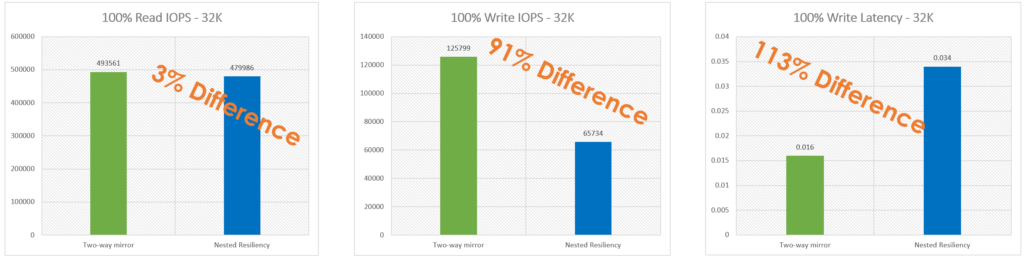

We can repeat these experiments with larger 32 kB block size. As you can see, the larger block size makes the effect more dramatic: reads are still about the same, but now we get just about half as many writes, and with more latency.

Storage is cheap, downtime is expensive

Yes, performance will be lower with nested resiliency than with regular two-way mirroring. Yes, you will have less usable capacity when using nested resiliency. BUT, you’ll get something important in return: fewer worries.

The cluster stays up when Murphy’s Law strikes again.

—

Special thanks to DataOn Storage for letting me use their hardware and to Cosmos from the S2D team for reviewing this blog post.

If you have any questions or feedback, leave a comment or drop me an email.

Darryl van der Peijl

http://www.twitter.com/DarrylvdPeijl
